Axon Server, from local install to full-featured cluster in the cloud

Axon Server is AxonIQ's flagship product and a companion to the Open-Source Axon Framework. It is available in two editions, Standard and Enterprise. Axon Server Standard Edition (SE) is available under the AxonIQ Open Source license, while Axon Server Enterprise Edition (EE) is licensed as a commercial product with a full range of support options.

In this blog series, I would like to take you along as we install Axon Server in several different scenarios, starting with a local installation of Axon Server Standard Edition as a “normal” application, then moving via Docker and Docker Compose to Kubernetes, to eventually arrive at a full cluster of Axon Server Enterprise Edition on cloud VMs. Of course, all platform examples can be run using both editions. Still, I wouldn’t expect you to run a three-node EE cluster on your laptop, just as it doesn’t make sense to go for a large production microservice architecture using a single-node SE install.

In this first blog, we’ll focus on getting a feel for the work involved in setting up a scripted installation. To follow along, you’ll need a typical development machine with Java 8 or 11. Although all my examples will use a regular GNU bash environment and work on macOS, Linux, and WSL, you should also be fine with a Windows Command shell. For Axon Server, head over to the download page on the AxonIQ website and get the QuickStart package, which includes an executable JAR file of the Open Source Axon Server Standard Edition. If you have a license for the Enterprise Edition, you’ll have received a ZIP file with the corresponding JAR file as well as a license file. Please make sure you have a copy of the license file, as Axon Server EE will not start without it. The documentation for Axon Server is part of the Axon reference guide. Lastly, I’ll sometimes use tools like “curl” and “jq” to talk to Axon Server through its REST API, and those should be available from the common default installation repositories for your OS or “homebrew”-like tool collections.

First Up

Let’s start by just looking at what a clean install looks like. For this, we’ll run an SE instance, so unpack the QuickStart zip-file and copy the two JAR files in the AxonServer subdirectory to an empty directory:

$ unzip AxonQuickStart.zip

…

$ mkdir server-se

$ cp axonquickstart-4.3/AxonServer/axonserver-4.3.jar server-se/axonserver.jar

$ cp axonquickstart-4.3/AxonServer/axonserver-cli-4.3.jar server-se/axonserver-cli.jar

$ cd server-se

$ chmod 755 *.jar

$ ./axonserver.jar

_ ____

/ \ __ _____ _ __ / ___| ___ _ ____ _____ _ __

/ _ \ \ \/ / _ \| '_ \\___ \ / _ \ '__\ \ / / _ \ '__|

/ ___ \ > < (_) | | | |___) | __/ | \ V / __/ |

/_/ \_\/_/\_\___/|_| |_|____/ \___|_| \_/ \___|_|

Standard Edition Powered by AxonIQ

version: 4.3

2020-02-20 11:56:33.761 INFO 1687 --- [ main] io.axoniq.axonserver.AxonServer : Starting AxonServer on arrakis with PID 1687 (/mnt/d/dev/AxonIQ/running-axon-server/server/axonserver.jar started by bertl in /mnt/d/dev/AxonIQ/running-axon-server/server)

2020-02-20 11:56:33.770 INFO 1687 --- [ main] io.axoniq.axonserver.AxonServer : No active profile set, falling back to default profiles: default

2020-02-20 11:56:40.618 INFO 1687 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat initialized with port(s): 8024 (http)

2020-02-20 11:56:40.912 INFO 1687 --- [ main] A.i.a.a.c.MessagingPlatformConfiguration : Configuration initialized with SSL DISABLED and access control DISABLED.

2020-02-20 11:56:49.212 INFO 1687 --- [ main] io.axoniq.axonserver.AxonServer : Axon Server version 4.3

2020-02-20 11:56:53.306 INFO 1687 --- [ main] io.axoniq.axonserver.grpc.Gateway : Axon Server Gateway started on port: 8124 - no SSL

2020-02-20 11:56:53.946 INFO 1687 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port(s): 8024 (http) with context path ''

2020-02-20 11:56:53.948 INFO 1687 --- [ main] io.axoniq.axonserver.AxonServer : Started AxonServer in 21.35 seconds (JVM running for 22.317)

So what we see here is Axon Server announcing itself, starting up without any security configured, and using the default ports 8024 for HTTP and 8124 for gRPC. You can open the management UI at "http://localhost:8024" and see a summary of its configuration, but if you question it at the REST interface, you’ll get more details:

$ curl -s http://localhost:8024/v1/public/me | jq

{

"authentication": false,

"clustered": false,

"ssl": false,

"adminNode": true,

"developmentMode": false,

"storageContextNames": [

"default"

],

"contextNames": [

"default"

],

"httpPort": 8024,

"grpcPort": 8124,

"internalHostName": null,

"grpcInternalPort": 0,

"name": "arrakis",

"hostName": "arrakis"

}

We covered several parts of this answer already: no authentication or SSL, no clustering (Standard Edition), and default ports. There is a single context, named “default,” used by Axon Framework applications, for which Axon Server provides an Event Store and communication services. The event store provides a Write-Once Read-Many copy of events. The internal hostname and internal gRPC port are only used in a clustered setup for communication between cluster nodes.
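Since this series is about scripted installations, the same endpoint can double as a simple readiness check. The following is only a sketch: the function name, the retry count, and the delay are my own choices, and it assumes “curl” is installed.

```shell
# Poll Axon Server's public "me" endpoint until it answers, or give up.
# Usage: wait_for_axonserver [base-url] [max-attempts] [seconds-between-attempts]
# (Function name and default values are illustrative, not part of Axon Server.)
wait_for_axonserver() (
    url="${1:-http://localhost:8024}/v1/public/me"
    attempts="${2:-30}"
    delay="${3:-2}"
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -f turns HTTP error statuses into a non-zero exit code, -s hides progress output
        if curl -sf "$url" >/dev/null 2>&1; then
            echo "Axon Server answered after $i attempt(s)"
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    echo "Axon Server did not answer after $attempts attempt(s)" >&2
    return 1
)
```

A start script could then run something like “./axonserver.jar & wait_for_axonserver && echo ready” before it continues with further configuration steps.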

The name and hostname are important and are, by default, set to the hostname of the system Axon Server is running on. You can force values for these using the “axonserver.properties” file, with the settings “axoniq.axonserver.name” and “axoniq.axonserver.hostname,” respectively. Additionally, you can specify a domain with “axoniq.axonserver.domain”. The first is used to distinguish between servers in a cluster and is generally equal to the hostname. The other two can change what is communicated back to a client about how to reach the server. The hostname may bite you even if you do not expose Axon Server under some externally defined name. For example, if you run it in Docker, the hostnames are randomly generated by default (and not the same as the container name, which is some cutesy pronounceable name). For Docker scenarios, you may be better off having it publicize itself as “localhost.”
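As a small sketch of how these three settings could be scripted, the helper below appends them to a properties file. The property names are the ones mentioned above; the function name and its arguments are hypothetical.

```shell
# Append explicit identity settings to a properties file.
# Usage: set_identity <properties-file> <node-name> <hostname> [domain]
# (set_identity is a hypothetical helper, not an Axon Server tool.)
set_identity() (
    file="$1"; name="$2"; host="$3"; domain="${4:-}"
    {
        echo "axoniq.axonserver.name=${name}"
        echo "axoniq.axonserver.hostname=${host}"
        # The domain is optional; leave the setting out when none is given
        if [ -n "$domain" ]; then
            echo "axoniq.axonserver.domain=${domain}"
        fi
    } >> "$file"
)
```

For the Docker scenario described above, a call such as “set_identity axonserver.properties axonserver localhost” would make the node publicize itself as “localhost.”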

Differences with Axon Server EE

As noted, some of the values you saw from the “/v1/public/me” endpoint were EE-specific. For example, if I start Axon Server EE in a clean directory, I will get:

$ curl -s http://localhost:8024/v1/public/me | jq

{

"authentication": false,

"clustered": true,

"ssl": false,

"adminNode": false,

"developmentMode": false,

"storageContextNames": [],

"contextNames": [],

"internalHostName": "arrakis",

"grpcInternalPort": 8224,

"grpcPort": 8124,

"httpPort": 8024,

"name": "arrakis",

"hostName": "arrakis"

}

As you can see, the “internal” values are now filled, but, maybe surprisingly, the context name arrays are empty. This is because an Axon Server EE node can have many different roles in a cluster: it may be a full admin node, providing services to configure the cluster and keep it running, next to the “regular” event store and messaging functions. However, it can also be configured to serve only specific contexts, in varying degrees of involvement, which we will come back to later on. Since it does not know yet what roles it will be required to play, the node exists in (empty) isolation until you add it to a cluster and choose its roles. To “convert” a group of uninitialized EE nodes into a cluster, you need to select one of them as the starting point and run the “init-cluster” command on it. You can do this with the CLI tool:

$ ./axonserver-cli.jar init-cluster

$ curl -s http://localhost:8024/v1/public/me | jq

{

"authentication": false,

"clustered": true,

"ssl": false,

"adminNode": true,

"developmentMode": false,

"storageContextNames": [ "default" ],

"contextNames": [ "_admin", "default" ],

"name": "axonserver-0",

"hostName": "axonserver-0",

"internalHostName": "axonserver-0",

"grpcInternalPort": 8224,

"grpcPort": 8124,

"httpPort": 8024

}

The init-cluster command itself will show no response if all goes well, but the response from the “/v1/public/me” REST endpoint shows that the node now considers itself an “admin” node. Also, it now knows about the “default” context we saw earlier, as well as a new “_admin” context. All admin nodes are members of the “_admin” context, and it is used to distribute cluster structure data in the same way as it distributes Events, Commands, and Queries. The other nodes can be added to the cluster using the “register-node” command, pointing it at an admin node already in the cluster:

$ ./axonserver-cli.jar register-node -h axonserver-0

$ curl -s http://localhost:8024/v1/public/me | jq

{

"authentication": false,

"clustered": true,

"ssl": false,

"adminNode": true,

"developmentMode": false,

"storageContextNames": [ "default" ],

"contextNames": [ "_admin", "default" ],

"name": "axonserver-1",

"hostName": "axonserver-1",

"internalHostName": "axonserver-1",

"grpcInternalPort": 8224,

"grpcPort": 8124,

"httpPort": 8024

}

$ curl -s http://localhost:8024/v1/public/context | jq

[

{

"metaData": {},

"nodes": [ "axonserver-0", "axonserver-1" ],

"leader": "axonserver-0",

"pendingSince": 0,

"changePending": false,

"roles": [

{ "role": "PRIMARY", "node": "axonserver-0" },

{ "role": "PRIMARY", "node": "axonserver-1" }

],

"context": "_admin"

},

{

"metaData": {},

"nodes": [ "axonserver-0", "axonserver-1" ],

"leader": "axonserver-0",

"pendingSince": 0,

"changePending": false,

"roles": [

{ "role": "PRIMARY", "node": "axonserver-0" },

{ "role": "PRIMARY", "node": "axonserver-1" }

],

"context": "default"

}

]

The new node has a different name, but otherwise, the “/v1/public/me” endpoint shows no differences; “axonserver-1” also is an admin node. Now, if we look at the “/v1/public/context” endpoint, we see the two nodes in both contexts and with the role “PRIMARY.” Also, both contexts have a leader—more on that in the next section.

A new feature in 4.3 is that you can use the configuration setting “...autocluster.first” to provide a node with the hostname of a known Admin node. If that name happens to be the hostname of the node that is starting up, it will automatically perform the “init-cluster” command on itself if needed. If the name is not the current hostname, it will schedule a task to perform the “register-node” command, which will keep trying until it succeeds, that is, until the first node is itself available and initialized. If you want, you can use the “...autocluster.contexts” setting to provide a comma-separated list of contexts to create or join. (Please note you have to explicitly add the “_admin” context if you want all nodes to become admin nodes.) This auto cluster feature is handy in the context of deployment from a single source, such as with Kubernetes or a modern DevOps pipeline.
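A sketch of what this could look like in a deployment script follows. It assumes that the “...” in the setting names abbreviates the “axoniq.axonserver” prefix used by the fully spelled-out settings elsewhere in this blog; the helper name and the default context list are my own.

```shell
# Append auto-cluster settings to a properties file.
# ASSUMPTION: "..." in the text stands for the "axoniq.axonserver" prefix.
# Usage: set_autocluster <properties-file> <first-node-hostname> [contexts]
# (set_autocluster is a hypothetical helper, not an Axon Server tool.)
set_autocluster() (
    file="$1"; first="$2"
    # Listing "_admin" explicitly makes every node using this file an admin node
    contexts="${3:-_admin,default}"
    {
        echo "axoniq.axonserver.autocluster.first=${first}"
        echo "axoniq.axonserver.autocluster.contexts=${contexts}"
    } >> "$file"
)
```

Because every node gets the same two lines, the same properties file can be shipped to all nodes, which is what makes the feature a good fit for single-source deployments.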

Clusters and Contexts

A cluster of Axon Server nodes will provide multiple connection points for (Axon Framework-based) client applications and thus share the load of managing message delivery and event storage. All nodes serving a particular context maintain a complete copy, with a “context leader” controlling the distributed transaction. The leader is determined by elections, following the RAFT protocol. In this blog, we are not diving into the details of RAFT and how it works, but an important consequence has to do with those elections: nodes need to win them or at least feel the support of a clear majority. So while an Axon Server cluster as a whole does not need to have an odd number of nodes, every individual context does, to prevent the chance of a draw in an election. This also holds for the internal context named “_admin,” which the admin nodes use to store the cluster structure data. Consequently, most clusters will have an odd number of nodes and will keep functioning as long as a majority (for a particular context) is responding and storing events.
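The majority arithmetic behind this is easy to work out. The two helper functions below are purely illustrative; they count only the voting members of a single context.

```shell
# Smallest number of nodes that forms a majority among N voting nodes
majority() { echo $(( $1 / 2 + 1 )); }

# Number of voting nodes that may be down while a majority still remains
tolerated_failures() { echo $(( $1 - ($1 / 2 + 1) )); }

# Print the figures for contexts of one to five voting nodes
for n in 1 2 3 4 5; do
    echo "nodes=$n majority=$(majority "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Three nodes need a majority of two and survive one failure. Four nodes need a majority of three and still survive only one failure, so the extra node adds cost without adding availability.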

A node can have different roles in a context:

- A “PRIMARY” node is a fully functional (and voting) member of that context. Thus, a majority of primary nodes are needed for a context to be available to client applications.

- A “MESSAGING_ONLY” member will not provide event storage and (as it is not involved with the transactions) is a non-voting member of the context.

- An “ACTIVE_BACKUP” node is a voting member which provides an event store, but it does not provide the messaging services, so clients will not connect to it. Note that at least one active backup node must be up at all times if you want a guarantee that your backups are up to date.

- Lastly, a “PASSIVE_BACKUP” node will provide an Event Store but not participate in transactions or even elections, nor provide messaging services. Whether it is up or down will never influence the availability of the context, and the leader will send any events accumulated during maintenance as soon as it comes back online.

From the perspective of a backup strategy, the active backup can keep an offsite copy that is always up-to-date. For example, if you have two active backup nodes, you can stop Axon Server on one of them to back up the event store files, while others will continue receiving updates. The passive backup node provides an alternative strategy, where the context leader will send updates asynchronously. While this does not give you the guarantee that you are always up-to-date, the events will eventually show up, and even with a single backup instance, you can bring Axon Server down and make file backups without affecting the cluster availability. When it comes back online, the leader will immediately start sending the new data.

Access Control

Ok, let’s enable access control. Stop Axon Server with Control-C and add the options:

$ TOKEN=$(uuidgen)

$ echo ${TOKEN}

cf8d5032-4a43-491c-9fbf-f28247f63faf

$ (

> echo axoniq.axonserver.accesscontrol.enabled=true

> echo axoniq.axonserver.accesscontrol.token=${TOKEN}

> ) >> axonserver.properties

$ ./axonserver.jar

_ ____

/ \ __ _____ _ __ / ___| ___ _ ____ _____ _ __

/ _ \ \ \/ / _ \| '_ \\___ \ / _ \ '__\ \ / / _ \ '__|

/ ___ \ > < (_) | | | |___) | __/ | \ V / __/ |

/_/ \_\/_/\_\___/|_| |_|____/ \___|_| \_/ \___|_|

Standard Edition Powered by AxonIQ

version: 4.3

2020-02-20 15:04:13.369 INFO 2469 --- [ main] io.axoniq.axonserver.AxonServer : Starting AxonServer on arrakis with PID 2469 (/mnt/d/dev/AxonIQ/running-axon-server/server/axonserver.jar started by bertl in /mnt/d/dev/AxonIQ/running-axon-server/server)

2020-02-20 15:04:13.376 INFO 2469 --- [ main] io.axoniq.axonserver.AxonServer : No active profile set, falling back to default profiles: default

2020-02-20 15:04:20.181 INFO 2469 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat initialized with port(s): 8024 (http)

2020-02-20 15:04:20.486 INFO 2469 --- [ main] A.i.a.a.c.MessagingPlatformConfiguration : Configuration initialized with SSL DISABLED and access control ENABLED.

2020-02-20 15:04:28.670 INFO 2469 --- [ main] io.axoniq.axonserver.AxonServer : Axon Server version 4.3

2020-02-20 15:04:33.017 INFO 2469 --- [ main] io.axoniq.axonserver.grpc.Gateway : Axon Server Gateway started on port: 8124 - no SSL

2020-02-20 15:04:33.669 INFO 2469 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port(s): 8024 (http) with context path ''

2020-02-20 15:04:33.670 INFO 2469 --- [ main] io.axoniq.axonserver.AxonServer : Started AxonServer in 21.481 seconds (JVM running for 22.452)

You can see on the line about the MessagingPlatformConfiguration that access control is now enabled. If you go back to your browser and refresh the page, you’ll be prompted with a login screen, even though we never told it anything about users. Have we outsmarted ourselves? No, here is where the CLI tool comes in with the token. This token is used to allow application access through the REST and gRPC APIs:

$ ./axonserver-cli.jar users

Error processing command 'users' on 'http://localhost:8024/v1/public/users': HTTP/1.1 401 - {"timestamp":1582207331549,"status":401,"error":"Unauthorized","message":"Unauthorized","path":"/v1/public/users"}

$ ./axonserver-cli.jar users -t cf8d5032-4a43-491c-9fbf-f28247f63faf

Name

$ ./axonserver-cli.jar register-user -t cf8d5032-4a43-491c-9fbf-f28247f63faf -u admin -p test -r ADMIN

$ ./axonserver-cli.jar users -t cf8d5032-4a43-491c-9fbf-f28247f63faf

Name

admin

Right; pass the token, and we can talk again. Next, we created a user with a password and gave it the role “ADMIN.” You can use this user to log in to the UI again. If you look at the log, you’ll notice a new feature of version 4.3: Audit logging.

2020-03-06 10:08:51.806 INFO 59361 --- [nio-8024-exec-3] A.i.a.a.rest.UserRestController : [AuthenticatedApp] Request to list users and their roles.

2020-03-06 10:10:02.834 INFO 59361 --- [nio-8024-exec-7] A.i.a.a.rest.UserRestController : [AuthenticatedApp] Request to create user "admin" with roles [ADMIN@default].

2020-03-06 10:10:02.837 INFO 59361 --- [nio-8024-exec-7] A.i.a.a.rest.UserRestController : [AuthenticatedApp] Create user "admin" with translated roles [ADMIN@default].

2020-03-06 10:10:09.560 INFO 59361 --- [nio-8024-exec-9] A.i.a.a.rest.UserRestController : [AuthenticatedApp] Request to list users and their roles.

Calls to the API are logged together with the user performing them, so you can record who did what. Applications using the token (such as the CLI) are indicated by “[AuthenticatedApp],” while actual users are mentioned by username. The capital “A” at the start of the logger name is a shortened “AUDIT,” so you can use the logging configuration to send these lines to a separate log if needed.
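The CLI is not the only client that can present the token; the REST API accepts it as well. The sketch below assumes the token travels in an HTTP header named “AxonIQ-Access-Token”, so please check the reference guide for your version. The helper name is hypothetical.

```shell
# Call an Axon Server REST endpoint, presenting the access token in a header.
# ASSUMPTION: the token is passed in the "AxonIQ-Access-Token" HTTP header.
# Usage: axon_get <token> <path> [base-url]
# (axon_get is a hypothetical helper, not an Axon Server tool.)
axon_get() (
    token="$1"; path="$2"; base="${3:-http://localhost:8024}"
    # -f makes curl fail on HTTP errors such as 401, -s hides progress output
    curl -sf -H "AxonIQ-Access-Token: ${token}" "${base}${path}"
)
```

With the token from earlier still in the TOKEN variable, “axon_get "${TOKEN}" /v1/public/users | jq” would request the same user list that the CLI showed.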

Differences with Axon Server EE

Axon Server EE does not use a single token for all applications. Rather, you can register different applications, give each of them access to specific contexts, and have tokens generated for them. The CLI is, of course, a special case, as you need some stepping stone to create an initial user, and this is done using a generated token that Axon Server stores locally in a directory named “security.” If you run the CLI from the installation directory of Axon Server, it will know about the token and use it automatically. The “...accesscontrol.token” setting is ignored by Axon Server EE. You can customize the location of the system token by specifying "...accesscontrol.token-dir" for the directory or "...accesscontrol.systemtokenfile" for a complete path to the file itself. We will come back to this when we visit Kubernetes.

Another special case is a token for communication between individual nodes in a cluster. You can configure this comparable to the token for SE by using the “...accesscontrol.internal-token” key. If you have a running cluster and want to enable access control, be sure to configure this token on all nodes before you start enabling access control. That way, you can keep the restarts limited to a single node at a time and never lose full cluster availability.

Configuring SSL

To enable SSL (and who doesn’t nowadays?), we have two different groups of settings to use, one for each port. The HTTP port uses the generic Spring-Boot configuration settings and requires a Java-compatible Keystore. For the gRPC port, we use standard PEM files. Please note that if you are setting up Axon Server behind a reverse proxy or load balancer, things can get a bit more complex, as you then have several choices in how to terminate the TLS connection. Your proxy may be where you configure a certificate signed by your provider, or you could let it transparently connect through to Axon Server. However, this last option is more complex to get right and makes it more difficult to use that proxy’s capacity for filtering and caching requests. We’re not going into these complexities here and will generate (and use) a self-signed certificate:

$ cat > csr.cfg <

…

This will give us both formats (PEM and PKCS12), with the latter using the alias “axonserver” and the same value as its password. To configure these for Axon Server, use:

# SSL enabled for HTTP server

# server.port=8433

server.ssl.key-store-type=PKCS12

server.ssl.key-store=tls.p12

server.ssl.key-store-password=axonserver

server.ssl.key-alias=axonserver

security.require-ssl=true

# SSL enabled for gRPC servers

axoniq.axonserver.ssl.enabled=true

axoniq.axonserver.ssl.cert-chain-file=tls.crt

axoniq.axonserver.ssl.private-key-file=tls.key
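For reference, files with the names used in these settings could be produced along the following lines. This is a minimal sketch and not necessarily the procedure used for this blog: the contents of “csr.cfg”, the key size, the validity period, and the function name are all assumptions. Unlike the certificate discussed next, this one includes a subject alternative name.

```shell
# Generate a self-signed certificate as PEM (tls.key, tls.crt) and PKCS12 (tls.p12).
# ASSUMPTION: the csr.cfg contents, key size, and validity are illustrative only.
# Usage: make_self_signed [hostname]   (writes into the current directory)
make_self_signed() (
    host="${1:-$(hostname)}"
    cat > csr.cfg <<EOF
[ req ]
distinguished_name = dn
x509_extensions    = ext
prompt             = no

[ dn ]
CN = ${host}

[ ext ]
subjectAltName = DNS:${host}
EOF
    # Private key plus self-signed certificate, both in PEM format
    openssl req -x509 -config csr.cfg -newkey rsa:2048 -nodes \
        -keyout tls.key -out tls.crt -days 365 || return 1
    # Bundle both into a PKCS12 keystore with alias and password "axonserver"
    openssl pkcs12 -export -inkey tls.key -in tls.crt -out tls.p12 \
        -name axonserver -passout pass:axonserver
)
```

Running “make_self_signed” without arguments uses the system’s hostname, which matches the way Axon Server announces itself by default.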

When you now restart Axon Server, you’ll see “SSL ENABLED” announced. Trying to connect to the server using the CLI will fail because it uses HTTP rather than HTTPS. By specifying the URL explicitly using -S https://localhost:8024 we are greeted with a new complaint: “Certificate for <localhost> doesn't match any of the subject alternative names: [].” This is due to the certificate being generated for the system’s hostname, while the URL specifies “localhost.” If you connect with the correct hostname, all should go well.

We now have Axon Server with access control and SSL configured.

Differences with Axon Server EE

As can be expected, with Axon Server Enterprise Edition, we have two extra settings for the internal gRPC port: “...ssl.internal-cert-chain-file” and “...ssl.internal-trust-manager-file”. The first is for the PEM certificate to be used for cluster-internal traffic if it is different from the one used for client connections. The most common reason for this is that the nodes use a different DNS name for internal (cluster node to cluster node) communication than for external connections. The second is for a (PEM) Keystore that certifies the other certificates, which may be needed when they are signed by an authority that is not available from the Java JDK’s CA Keystore.

A thing to remember is that enabling SSL on an Axon Server cluster will require downtime, as the “...ssl.enabled” setting controls both server and client-side code. This is intentional, as it is unreasonable to expect every node to keep settings per peer node showing which ones communicate using SSL and which do not. It is better to enable SSL from the start.

Axon Server storage

Axon Server provides both communication and storage services to Axon Framework-enabled client applications. If the client application has no other EventStore configured, the Axon Server connector component will delegate this work to Axon Server. This is an essential feature of Event Sourced applications, where the Event Store functions as a Write-Once-Read-Many database. This aspect is important to remember because no data in the Event Store is ever modified or deleted, so the storage requirements will only ever increase; choosing a different implementation will not change this. As a consequence, Axon Server has been heavily optimized for this access pattern.

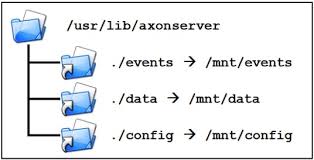

After you start Axon Server for the first time, it will by default look in the current directory for a directory named “data,” and inside it a directory named “default,” and create them as needed. This is where the events and snapshots for the “default” context will be stored. You can customize the locations using the “...event.storage” and “...snapshot.storage” settings. There is also a small database in the “data” directory, referred to as the “ControlDB,” which is used for administrative data. You can customize its location by using the “...controldb-path” setting.
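As a sketch of how these locations could be set up from a script, the helper below creates three directories and appends the matching settings. It assumes that “...” abbreviates the “axoniq.axonserver” prefix; the helper name and the directory layout are hypothetical.

```shell
# Create storage directories and point Axon Server at them.
# ASSUMPTION: "..." in the text stands for the "axoniq.axonserver" prefix.
# Usage: set_storage <properties-file> <base-directory>
# (set_storage and the directory names below are illustrative choices.)
set_storage() (
    file="$1"; base="$2"
    mkdir -p "${base}/events" "${base}/snapshots" "${base}/controldb" || return 1
    {
        echo "axoniq.axonserver.event.storage=${base}/events"
        echo "axoniq.axonserver.snapshot.storage=${base}/snapshots"
        echo "axoniq.axonserver.controldb-path=${base}/controldb"
    } >> "$file"
)
```

Keeping events, snapshots, and the ControlDB in separate directories makes it easier to put them on different volumes later.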

The data in the Event Store can be backed up regularly, and the REST API (and CLI tool) can help you with a list of files that will no longer change. See the reference guide for more details. You can, in fact, simply copy all files, but the “current” event or snapshot file will need to be scanned by Axon Server at the start to verify the correct structure and to find the last committed location, which is why it is not normally added to the backup list. If having a full backup at all times is a strong requirement, it is recommended to go for the (clustered) Enterprise Edition, where you’ll get multiple copies of the store with the option of running one in a different geographical location for additional safety. A backup of the control database can also be requested and delivered as a ZIP file, but after a catastrophic loss, its contents can also be recovered through other means, at the cost of some handwork and a scan of all stored contexts.

Differences with Axon Server EE

The same settings are used in the EE world, with the change that we’ll see more directories than “default” created for events and snapshots. These are the storage locations for other contexts, and they cannot (yet) be individually configured. If you want to change the location for an individual context, you can use OS features such as symbolic links.
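The symbolic link approach could be sketched as follows. The helper name is hypothetical, and I am assuming Axon Server is stopped while the directory is moved.

```shell
# Move one context's storage directory elsewhere and leave a symbolic link behind.
# Run this only while Axon Server is stopped.
# Usage: relocate_context <data-directory> <context> <new-parent-directory>
# (relocate_context is a hypothetical helper, not an Axon Server tool.)
relocate_context() (
    data_dir="$1"; context="$2"; target="$3"
    src="${data_dir}/${context}"
    # A relative link target would be resolved from the link's own directory
    case "$target" in
        /*) ;;
        *) echo "target must be an absolute path" >&2; return 1 ;;
    esac
    [ -d "$src" ] && [ ! -L "$src" ] || { echo "no plain directory at $src" >&2; return 1; }
    mkdir -p "$target" || return 1
    mv "$src" "${target}/${context}" || return 1
    ln -s "${target}/${context}" "$src"
)
```

For example, “relocate_context "$PWD/data" orders /mnt/fast” would move a context named “orders” to a hypothetical faster volume while Axon Server keeps finding it under “data/orders”.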

Another thing unique for Axon Server EE is the replication log, which stores context data as it comes in and is distributed. These logs will use storage space but get cleaned regularly from data that has been successfully replicated to the other nodes. The storage location for the replication log can be adjusted using the “...replication.log-storage-folder” property. Finally, in contrast to the Event Store, where you will not see it, the “_admin” context does show up here for configuration changes in the cluster. Generally, the “_admin” data will be persisted in the ControlDB.

However, the major difference between SE and EE is that you can have multiple copies of all data stored across the nodes in the cluster, and transactions are only considered committed when a majority of the nodes for the context have reported successful storage. The commit is then also distributed, so a node knows the data should now be considered fixed. Uncommitted data may be discarded in a recovery situation, followed by a resend from the leader of all data after the last known good position. Cluster-wide, this will still mean some data could be lost, but it will not have been committed in the first place. If a node is marked for the “active-backup” role in a context, it will be a part of the cluster-wide transaction but will not service client applications. Having two active-backup nodes will allow you to bring one down to back up the files while still capturing new transactions on the other. A “passive backup” node is not part of the transaction but follows at a best-effort pace. This is perfect for an offsite backup when communication with it is noticeably slower than with the active nodes, and bringing it down for a full backup of the Event Store will not impact transactions. You can also assign it slower (and cheaper) storage for that reason.

Wrap-up on part one

In this first installment, we looked at the basic settings for Axon Server that are not Axon Framework related, and therefore often overlooked by developers. Let’s be honest; you want to get up and running as soon as possible, and if you are looking at tweaking the Event Store and Messaging Hub, it's most likely about gRPC communications and not SSL certificates. Having to use and maintain valid certificates is never a simple thing, as it involves using a command-line tool such as “OpenSSL,” with its dozens of sub-commands and options and an interface that isn’t easy to automate. Hopefully, this blog has helped explain the most relevant settings for professionalizing your Axon Server setup. Now we can start looking at applying them as painlessly as possible. Next time we’ll run Axon Server in Docker and look at how we can easily pass the required information into a standardized Docker container.

Would you like to have a chat with me personally? Please send an email to info@axoniq.io, and we will schedule a call so you can make better decisions for your Axon Server setup.

See you next time!