
AxonIQ Conference 2025, Day 2 | Slaying Three Dragons: Caching, AI and Analytics at Scale

ASSIST shares battle-tested lessons from production: solving deadlocks, achieving 100% cache hit rates with a cache size of ONE, building AI recommendations from event history, and creating real-time analytics. Watch as Richard and Ondrej reveal how they transformed an automotive spare-parts eShop into a high-performance, ML-powered system handling millions of events.

🎯 Speakers:

Richard Bouška - CTO, ASSIST

Ondrej Halata - Lead DevOps Engineer, ASSIST

🐉 The Three Dragons Slain:

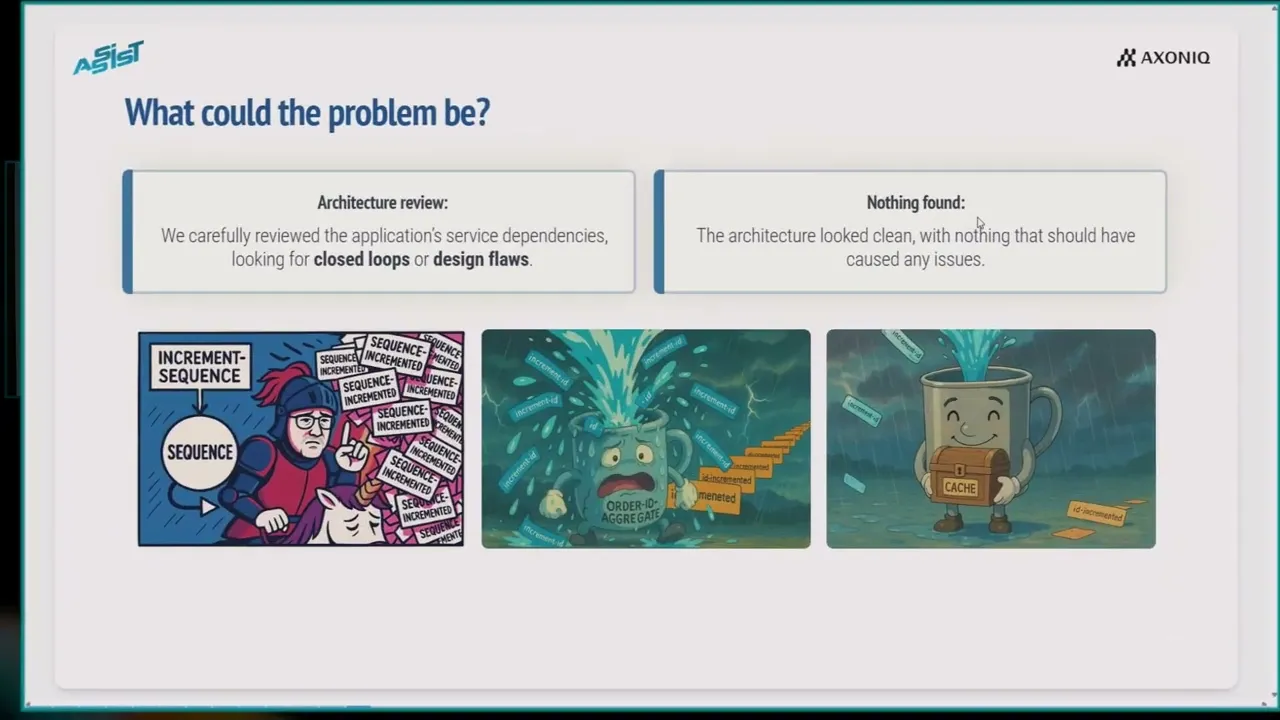

Dragon 1: Circular Dependency Deadlocks

Problem: 10 threads, 5-minute timeout, 3 retries = 20 minutes of silence (the first attempt plus 3 retries, each waiting out the 5-minute timeout)

Root Cause: Aggregates dispatching commands directly to other aggregates

Solution: Choreography pattern with sagas, event-driven communication

Result: Quick deployment, application restructured
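To make the choreography fix concrete, here is a minimal sketch in plain Python (not Axon Framework's API). Aggregates only return events; a saga-like object reacts to those events and decides the follow-up command, and a toy single-threaded dispatcher finishes each command before handling the next. All names (`OrderAggregate`, `StockAggregate`, `OrderFulfilmentSaga`, `CommandLoop`, the event types) are hypothetical illustrations, not ASSIST's code.

```python
from collections import deque


class OrderAggregate:
    """Decides and emits events; never dispatches commands itself."""

    def __init__(self, order_id):
        self.order_id = order_id
        self.placed = False

    def place_order(self, part_id, qty):
        self.placed = True
        return [{"type": "OrderPlaced", "order_id": self.order_id,
                 "part_id": part_id, "qty": qty}]


class StockAggregate:
    def __init__(self, part_id, available):
        self.part_id = part_id
        self.available = available

    def reserve_stock(self, order_id, qty):
        if qty > self.available:
            return [{"type": "StockRejected", "order_id": order_id}]
        self.available -= qty
        return [{"type": "StockReserved", "order_id": order_id, "qty": qty}]


class OrderFulfilmentSaga:
    """Choreography: reacts to events and chooses the follow-up command."""

    def on_event(self, event):
        if event["type"] == "OrderPlaced":
            return [("reserve_stock", event["part_id"],
                     {"order_id": event["order_id"], "qty": event["qty"]})]
        return []


class CommandLoop:
    """Toy dispatcher: a command runs to completion before any follow-up
    command from the saga is handled, so no aggregate waits on another."""

    def __init__(self, aggregates, saga):
        self.aggregates = aggregates  # aggregate id -> aggregate instance
        self.saga = saga
        self.queue = deque()
        self.event_log = []

    def send(self, command, aggregate_id, payload):
        self.queue.append((command, aggregate_id, payload))

    def run(self):
        while self.queue:
            command, aggregate_id, payload = self.queue.popleft()
            handler = getattr(self.aggregates[aggregate_id], command)
            for event in handler(**payload):
                self.event_log.append(event)
                self.queue.extend(self.saga.on_event(event))


loop = CommandLoop({"order-1": OrderAggregate("order-1"),
                    "brake-pad": StockAggregate("brake-pad", available=10)},
                   OrderFulfilmentSaga())
loop.send("place_order", "order-1", {"part_id": "brake-pad", "qty": 3})
loop.run()
print([e["type"] for e in loop.event_log])  # ['OrderPlaced', 'StockReserved']
```

The design choice is that the order aggregate never holds its own lock while waiting for the stock aggregate, which is the shape that produces circular waits when aggregates call each other directly.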

Dragon 2: Hydration Performance Crisis

Problem: Sequence generator aggregate with 25K daily events

Numbers: 320M events without snapshots, 6.2M with snapshots

Solution: Consistent hashing plus Caffeine cache

Result: 700ms to 8ms per sequence ID, globally distributed; cache size ONE with a 100% hit rate
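Why can a cache of size ONE hit 100% of the time? If consistent hashing always routes commands for the sequence-generator aggregate to the same node, that node's one-entry cache always holds it, and only the very first load misses. The sketch below assumes an MD5-based ring with 100 virtual nodes per member and uses a hand-rolled `SingleEntryCache` as a stand-in for a Caffeine cache bounded to one entry; node names, `hydrate` and the aggregate id are hypothetical. The last part adds a node to show the topology caveat: keys that change owner land on a node whose cache is cold.

```python
import bisect
import hashlib


def stable_hash(key: str) -> int:
    # md5 gives the same value in every process, unlike Python's hash().
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class ConsistentHashRing:
    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes
        self.points = []  # sorted virtual-node positions on the ring
        self.owner = {}   # position -> physical node
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self.vnodes):
            point = stable_hash(f"{node}#{i}")
            bisect.insort(self.points, point)
            self.owner[point] = node

    def node_for(self, aggregate_id):
        # Owner = first virtual node clockwise from the key, wrapping at the end.
        i = bisect.bisect(self.points, stable_hash(aggregate_id)) % len(self.points)
        return self.owner[self.points[i]]


class SingleEntryCache:
    """Per-node cache holding exactly one hydrated aggregate."""

    def __init__(self):
        self.key, self.value = None, None
        self.hits = self.misses = 0

    def get(self, key, load):
        if key == self.key:
            self.hits += 1
        else:
            self.misses += 1  # evict the old entry, hydrate the new one
            self.key, self.value = key, load(key)
        return self.value


def hydrate(aggregate_id):
    # Placeholder for replaying the aggregate's events from the event store.
    return {"id": aggregate_id, "last_issued": 0}


nodes = ["node-a", "node-b", "node-c"]
ring = ConsistentHashRing(nodes)
caches = {node: SingleEntryCache() for node in nodes}

for _ in range(1000):
    node = ring.node_for("sequence-generator")
    state = caches[node].get("sequence-generator", hydrate)
    state["last_issued"] += 1

# Topology change: only keys claimed by the new node move, but they start cold.
keys = [f"order-{n}" for n in range(10_000)]
before = {k: ring.node_for(k) for k in keys}
ring.add_node("node-d")
moved = [k for k in keys if ring.node_for(k) != before[k]]
print(f"{len(moved) / len(keys):.0%} of keys moved to node-d")
```

With a fixed topology the run yields one miss and 999 hits; after a node joins, roughly a quarter of the keys move in this sketch, and each of those pays a cold hydration on its new owner.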

Dragon 3: Tiered Storage Optimization

Problem: Growing event count, with older storage tiers being slower

Discovery: Power law distribution; older events are accessed less often

Solution: Aggressive caching stabilized server open segments

Result: Flat performance since July despite a growing event count
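A quick way to see why a power law rewards caching: if the key of popularity rank r is accessed with relative frequency r^-s, a cache that always holds the k most popular keys serves most traffic even when k is tiny compared with the key space. This is an idealized upper bound, assuming a pure Zipf distribution; the exponent 1.2 and the key counts are illustrative values, not ASSIST's measurements, and real eviction policies (such as Caffeine's) only approximate this ideal.

```python
import math


def ideal_hit_rate(num_keys: int, cache_size: int, exponent: float) -> float:
    """Share of accesses served by a cache that always holds the `cache_size`
    most popular keys, when the key of popularity rank r is accessed with
    relative frequency r ** -exponent (a Zipf / power-law distribution)."""
    weights = [rank ** -exponent for rank in range(1, num_keys + 1)]
    return math.fsum(weights[:cache_size]) / math.fsum(weights)


for size in (10, 100, 1_000, 10_000):
    print(size, round(ideal_hit_rate(100_000, size, 1.2), 3))
```

In this model, caching 1% of 100,000 keys already serves well over three quarters of accesses, which matches the intuition that cold, old events can sit on slower tiers without hurting the hot path.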

💻 Tech Stack:

Axon Framework 4 and Axon Server cluster

Caffeine Cache for aggregate caching

Consistent Hashing for routing stability

PostgreSQL for analytical projections

Grafana for real-time dashboards

Word2Vec neural network for ML

AWS infrastructure with S3 and SQS
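Real-time analytics projections boil down to an event handler that updates a query table as each event arrives, so a dashboard such as Grafana reads current numbers instead of waiting for a nightly batch. The sketch assumes an in-memory `sqlite3` database as a runnable stand-in for PostgreSQL; the `part_sales` table, the `OrderPlaced` event shape and `PartSalesProjection` are hypothetical. SQLite's `ON CONFLICT ... DO UPDATE` upsert has a close PostgreSQL counterpart.

```python
import sqlite3


class PartSalesProjection:
    """Keeps a per-part sales table current, one event at a time."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS part_sales ("
            " part_id TEXT PRIMARY KEY,"
            " orders INTEGER NOT NULL,"
            " units INTEGER NOT NULL)"
        )

    def on_event(self, event: dict) -> None:
        if event["type"] != "OrderPlaced":
            return  # this projection only cares about placed orders
        # Upsert: insert a new row, or increment the existing counters.
        self.conn.execute(
            "INSERT INTO part_sales (part_id, orders, units) VALUES (?, 1, ?) "
            "ON CONFLICT(part_id) DO UPDATE SET "
            " orders = orders + 1, units = units + excluded.units",
            (event["part_id"], event["qty"]),
        )
        self.conn.commit()


conn = sqlite3.connect(":memory:")
projection = PartSalesProjection(conn)
for event in [
    {"type": "OrderPlaced", "part_id": "brake-pad", "qty": 2},
    {"type": "OrderPlaced", "part_id": "oil-filter", "qty": 1},
    {"type": "OrderPlaced", "part_id": "brake-pad", "qty": 4},
]:
    projection.on_event(event)

rows = conn.execute(
    "SELECT part_id, orders, units FROM part_sales ORDER BY part_id").fetchall()
print(rows)  # [('brake-pad', 2, 6), ('oil-filter', 1, 1)]
```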

🚀 Performance Breakthrough:

Before Optimization: 700ms per sequence ID

With Snapshots (every 500 events): 70ms

With Caffeine Cache: 8ms

Cache Hit Rate: 100% with size ONE

Other Aggregates: 80-90% hit rates with 1,000 entries
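The snapshot step pays off because hydration replays only the events after the latest snapshot instead of the aggregate's whole history. The sketch below models a counter-like aggregate (think of a sequence generator) with a snapshot every 500 events; the class names and the figure of 25,123 events, chosen to sit near the 25K daily events mentioned for Dragon 2, are illustrative assumptions rather than Axon's snapshotting API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Snapshot:
    sequence: int  # number of events already folded into `state`
    state: int     # e.g. the last sequence ID issued


class SnapshottingEventStream:
    """Toy event stream for one counter-like aggregate whose events each
    advance the counter by some increment."""

    def __init__(self, threshold: int = 500):
        self.threshold = threshold
        self.events: list[int] = []
        self.snapshot: Snapshot | None = None

    def load(self) -> tuple[int, int]:
        """Hydrate: start from the latest snapshot (if any) and replay only
        the events after it. Returns (state, events_replayed)."""
        start, state = 0, 0
        if self.snapshot is not None:
            start, state = self.snapshot.sequence, self.snapshot.state
        tail = self.events[start:]
        return state + sum(tail), len(tail)

    def append(self, increment: int) -> None:
        self.events.append(increment)
        if len(self.events) % self.threshold == 0:
            # Every `threshold` events, persist the folded state as a snapshot.
            state, _ = self.load()
            self.snapshot = Snapshot(len(self.events), state)


with_snapshots = SnapshottingEventStream(threshold=500)
without_snapshots = SnapshottingEventStream(threshold=10**9)  # never snapshots
for _ in range(25_123):
    with_snapshots.append(1)
    without_snapshots.append(1)

print(with_snapshots.load())     # (25123, 123)
print(without_snapshots.load())  # (25123, 25123)
```

Snapshots cap the replay at fewer than 500 events per load; the cache step then removes even that by keeping the hydrated aggregate in memory.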

🎯 The Unicorn Moment:

The Server Open Segments chart shows the breakthrough: flat performance since July despite a growing event count. Because access follows a power law distribution, older events are rarely read. The cache handles hot aggregates, while tiered storage keeps cold data cost-effective.

🎓 Key Lessons:

Consistent hashing critical but watch topology changes

AXONIQ-2000 error provides retry compensation

Power law distribution = aggressive caching wins

Event history enables ML without data engineering

Real-time analytics trivial with projection services

Observability is standard tooling at ASSIST
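The retry-compensation lesson can be pictured as a reload-and-retry loop around an optimistic append. This sketch assumes that the AXONIQ-2000 error signals a sequence conflict (another writer already used the next sequence number for the aggregate); `SequenceConflict`, `EventStream`, `handle_with_retry` and the simulated race are hypothetical names, not Axon Server's client API.

```python
class SequenceConflict(Exception):
    """Raised when an append's expected sequence number is already taken."""


class EventStream:
    def __init__(self):
        self.events = []

    def append(self, expected_sequence, event):
        # Optimistic concurrency: the writer must have seen the latest event.
        if expected_sequence != len(self.events):
            raise SequenceConflict(
                f"expected sequence {expected_sequence}, "
                f"stream is at {len(self.events)}")
        self.events.append(event)


def handle_with_retry(stream, decide, max_attempts=3):
    """Hydrate, decide the next event, append; on a conflict, re-hydrate and
    retry. Returns the attempt number that succeeded."""
    for attempt in range(1, max_attempts + 1):
        history = list(stream.events)  # hydrate from the current stream
        event = decide(history)        # business logic on fresh state
        try:
            stream.append(len(history), event)
            return attempt
        except SequenceConflict:
            if attempt == max_attempts:
                raise                  # give up: surface the error
    raise ValueError("max_attempts must be at least 1")


# Simulated race: another node appends while our first attempt is deciding.
stream = EventStream()
histories_seen = []


def next_sequence_id(history):
    histories_seen.append(len(history))
    if len(histories_seen) == 1:
        stream.events.append("sequence-id-1 (issued by another node)")
    return f"sequence-id-{len(history) + 1}"


print(handle_with_retry(stream, next_sequence_id))  # 2
print(stream.events)
```

Because each retry re-hydrates before deciding, the second attempt issues `sequence-id-2` instead of a duplicate ID.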

🚫 What NOT To Do:

❌ Commands from aggregates (use choreography)

❌ Ignoring consistent hashing topology changes

❌ Forgetting snapshots on long-living aggregates

❌ Missing cache opportunities (power law applies!)

❌ Waiting 24 hours for analytics (project real-time!)